Word Embeddings and the Shape of Meaning

If vector space is where numbers learn to mean things,

then word embeddings are how words find their place inside it.

Every time an AI model reads text — whether it’s a line of dialogue, a puzzle clue, or your latest prompt — it first turns those words into vectors.

It can’t think in symbols like we do.

It has to translate language into geometry.

From Symbols to Meaning

Human language is built from symbols — patterns of sound or text that stand in for thoughts.

Human language is built from symbols — patterns of sound or text that stand in for thoughts.

When someone speaks Russian and you don’t understand a word of it, the sounds hit your ears but never connect to meaning.

Your brain hasn’t built the mapping yet — the vector space where those sounds live.

Inside your mind, every familiar word has a location — formed from every time you’ve heard, read, or used it.

That’s why “cat” and “kitten” feel related, and “spooky” sits close to “Halloween.”

Your neurons aren’t storing dictionary definitions; they’re storing statistical relationships built from experience.

That’s exactly what word embeddings do for machines.

How Word Embeddings Work

When training embeddings, a model reads millions (or billions) of sentences and learns which words appear near which others.

When training embeddings, a model reads millions (or billions) of sentences and learns which words appear near which others.

Each word is represented as a vector — a list of floating-point numbers, often 100 to 300 long.

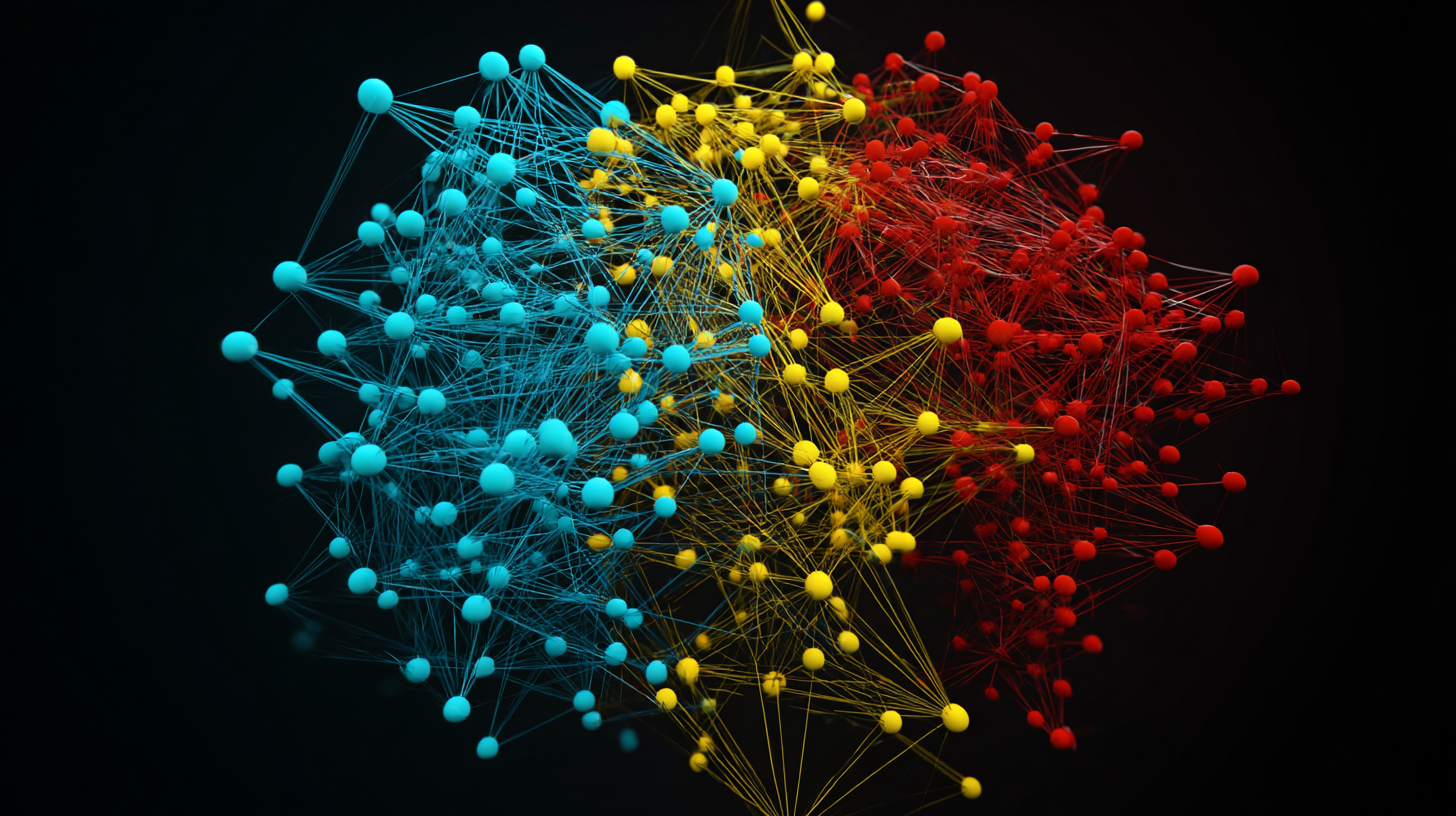

As training proceeds, those vectors shift until the geometry starts to make sense:

- Words that appear in similar contexts move closer together.

- Words that never co-occur drift apart.

- Relationships emerge as directions in space:

King – Man + Woman ≈ Queen.

That’s not magic — it’s statistics turning into structure.

Frameworks like Word2Vec, GloVe, and FastText learned these patterns by sliding a small window (say, five words before and after) across massive text corpora — Wikipedia, news archives, and the open internet.

Each pass updates the vectors slightly, until the network has literally mapped the language.

Why Pre-Trained Embeddings Matter

Training embeddings well takes time, data, and compute.

Training embeddings well takes time, data, and compute.

Billions of words, thousands of GPU hours, and careful tuning.

That’s why open sets like GloVe and FastText are so valuable — they capture a general map of English (or any language) that you can reuse as a foundation.

Once you have that base, you can fine-tune or retrain smaller embeddings for your own domain — say, the vocabulary of your game.

You can also prune the model down to only the words you need, dramatically reducing size and load time.

And you can scale the vectors themselves — shrinking from 300 dimensions to 128 or even 64 — while maintaining enough resolution for your use case.

It’s just like any other optimization in game development: you tune fidelity to fit performance.

Same idea — only now, you’re optimizing meaning.

Custom Embeddings for Games

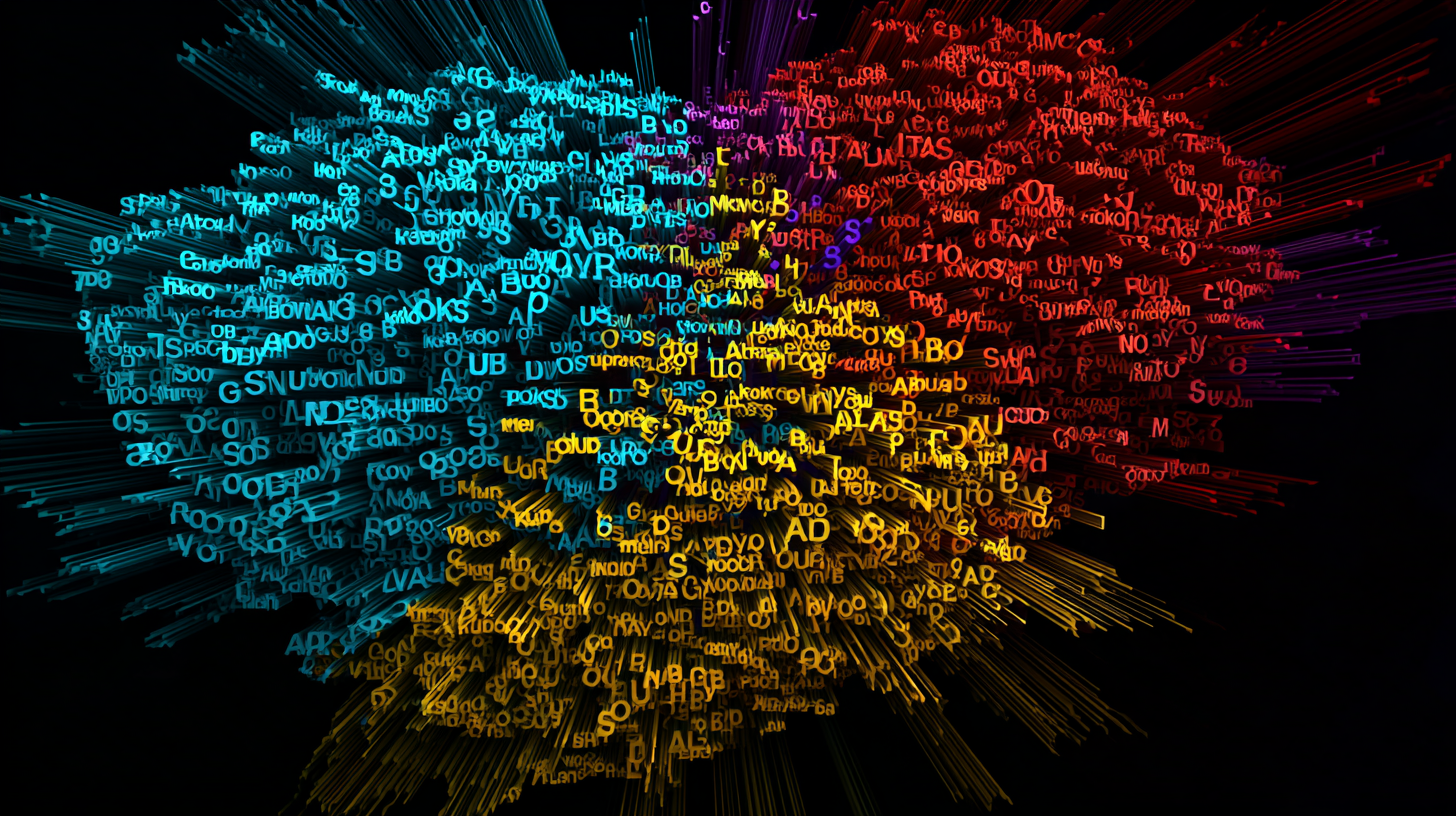

Imagine training embeddings on every level name, clue, and player chat log in your word game.

Imagine training embeddings on every level name, clue, and player chat log in your word game.

The model would start to learn your tone of language — how “twist,” “spark,” and “fusion” relate inside your universe.

You could also train thematic embeddings — for example, on the Harry Potter novels to build a fantasy vocabulary space where “wand,” “spell,” and “patronus” orbit near each other.

Then, when your puzzle generator searches for related words, it’s not guessing from a dictionary — it’s navigating a learned world of meaning.

That’s the power of embeddings:

they let your system reason about semantic distance instead of string matching.

“Spooky” becomes near “ghost” and “pumpkin,” not just words that share a prefix.

Why This Matters

For game developers, embeddings open new frontiers:

For game developers, embeddings open new frontiers:

- Procedural creativity: Generate puzzles, levels, and clues that feel on-theme — spooky for Halloween, cozy for fall, sci-fi for event week.

- Player modeling: Represent each player’s vocabulary and preferences as a vector, and measure overlap with upcoming content.

- Adaptive gameplay: Match players to words, themes, and levels that resonate with them personally — so the game gradually becomes the game you want when you play it.

- Search and matching: Find semantically similar words, phrases, or puzzles without hand-written rules.

Word embeddings give you a continuous space where meaning has shape — and where your content, your theme, and your players all live together.

That’s how adaptive design stops being a buzzword and starts being a system.

Closing

Language isn’t just stored — it’s shaped.

Language isn’t just stored — it’s shaped.

Embeddings reveal that shape, turning words into points on the map of meaning.

The tools are easy.

The mindset is the work.

Every developer should experience their first model not just learning to see —

but learning to understand.